National Security Is Not International Security: A Critique of AGI Realism

Navigating the AI Arms Race: Beyond AGI Realism

The Illusion of Military Dominance Through Superintelligence

The narrative that superintelligence guarantees military supremacy overlooks the nuances of real-world conflict. History teaches us that wars are rarely all-out clashes in which superior technology reigns supreme. Limited wars, defined by specific political objectives and resource constraints, are far more common. Even a technologically superior force, like the US in Afghanistan, can be stymied by factors such as political will and domestic sentiment.

Overemphasizing the role of AI in hypothetical total war scenarios is not only counterproductive but potentially dangerous. It risks escalating tensions and driving development towards autonomous AGI capabilities, magnifying the very risks we seek to avoid. A balanced approach, prioritizing safety and flexibility, is crucial.

Reframing AI Security: Beyond Nationalistic Narratives

The prevailing narrative casts superintelligence as an inherent national security threat that demands securitization. But security threats are socially constructed, not preordained. Over-securitization, driven by the AGI realist paradigm, can become a self-fulfilling prophecy, accelerating an arms race that sweeps in all AI advancements regardless of their actual risk profile.

The example of the "nuclear taboo" demonstrates the power of norms to constrain even the most destructive technologies. We must similarly shape the narrative around AI, differentiating between types of capabilities and development paths. For instance, the open-sourcing of frontier AI models poses a far greater immediate risk than the mere existence of a powerful AI system within a specific nation.

The Limits of Hegemony in the Age of AI

The pursuit of US hegemony in AI, a cornerstone of AGI realism, is fraught with peril. History is replete with examples of hegemonic overreach provoking counterbalancing and escalating conflict. Moreover, true hegemony requires far more than military might: it demands economic influence, institutional legitimacy, and cultural soft power.

Even at its peak, US power proved insufficient to shape the global order unilaterally. Expecting a temporary AI lead to translate into enduring dominance is unrealistic, especially when there is no clear discontinuity between highly capable AI and superintelligence that would lock such a lead in place. The attempt could instead lead to reliance on military coercion to enforce AI safety, exacerbating tensions in an already unstable global environment.

“If China can't get millions of chips, we'll (at least temporarily) live in a unipolar world…” - Dario Amodei, CEO of Anthropic. This casual assertion of unipolarity overlooks its potential for instability and the fundamental limitations of hegemony.

The Perils of an AI Arms Race

Winning an AI arms race is not the same as achieving hegemony. True global leadership demands more than military dominance. Focusing solely on an AI advantage risks alienating allies, destabilizing existing alliances, and even provoking rival alignments. The UK's departure from the EU is a potent reminder of how fragile such partnerships can be, even among nations with shared values.

The Cold War Analogy: A Recipe for Disaster?

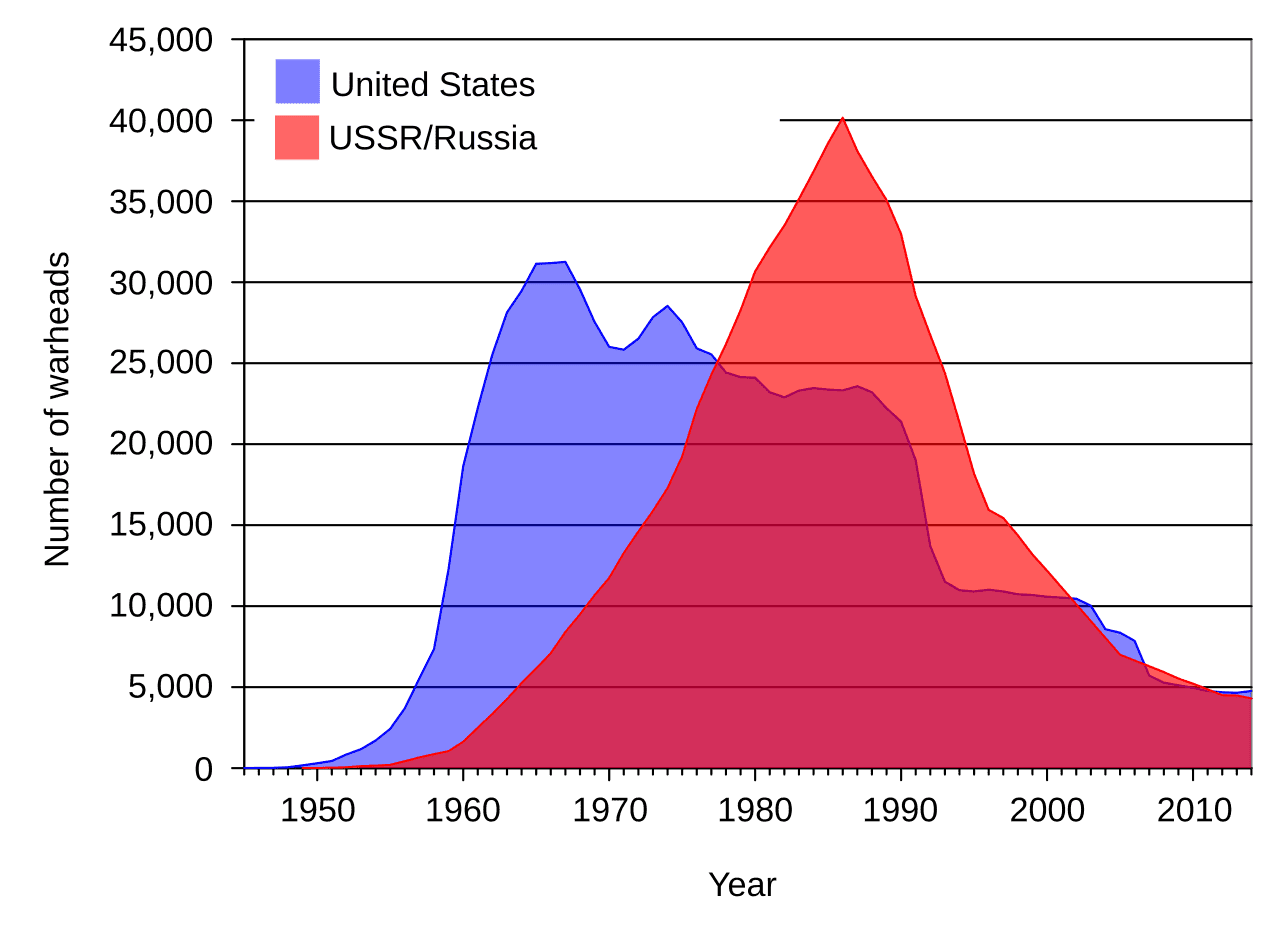

Comparing the current AI landscape to the Cold War and advocating nuclear-style deterrence is a dangerous fallacy. The Cold War was a period of heightened existential risk, punctuated by near misses that owed as much to luck as to strategic brilliance. Replicating this dynamic with AI would accelerate the proliferation of dangerous capabilities and raise the likelihood that the risks of autonomous AGI materialize.

"The main—perhaps the only—hope we have is that an alliance of democracies has a healthy lead over adversarial powers." - Leopold Aschenbrenner, former OpenAI researcher. This statement neglects the lessons of history, in which such a lead has rarely translated into lasting peace.

Beyond Export Controls: Embracing Collaborative Governance

Export controls, while necessary to constrain dangerous capabilities, should not preclude collaborative governance. Economic interdependence and shared interests can be leveraged to foster international cooperation on AI safety. Space policy offers a valuable precedent: even Cold War rivals concluded the Outer Space Treaty and later cooperated on joint missions such as the International Space Station.

The Power of Norms: Shaping a Safer AI Future

Long-term stability relies on robust norms. Although norms can be fickle and difficult to enforce, they play a crucial role in shaping state behavior. Overemphasizing military dominance in the AI narrative risks sidelining critical norm-forming efforts, such as international cooperation and nuanced securitization strategies.

Incremental Steps Towards AI Safety

There is no silver bullet for AI safety. What is needed is a multifaceted approach built from scope-sensitive policies that each target specific risks. This includes tighter controls where AI intersects with the biological and nuclear domains, hardware restrictions, and international verification regimes. While each measure may have limited impact on its own, their cumulative effect can significantly reduce catastrophic risks without stifling progress.

Beyond AGI Realism: Embracing a Balanced Approach

The pursuit of a US-centric victory in the AI race is a perilous path. It risks escalating an arms race, alienating allies, and destabilizing global governance. A balanced approach, combining norm-forming, incremental safety measures, robust export controls, and international cooperation, offers a more sustainable and less dangerous route to a safer AI future.