Narratives as catalysts of catastrophic trajectories

The Narrative of Power: From Nuclear Arms to Artificial Intelligence

The Spectre of Existential Threat: A Shared Narrative

From the chilling dawn of the nuclear age to the rapid rise of artificial intelligence, humanity has grappled with the narrative of transformative technology as both a potential savior and a catastrophic risk. This essay examines how such narratives shape our approach to these technologies, drawing parallels between the Cold War nuclear arms race and the current AI landscape.

The Manhattan Project Parallel: A Race Against Whom?

The Manhattan Project, born from the fear of a Nazi Germany armed with nuclear weapons, set a precedent for a narrative of technological one-upmanship. This narrative, echoed in today's AI discourse, posits that building transformative AI first is the only path to global security. Former OpenAI researcher Leopold Aschenbrenner, for example, argues that the U.S. must win the AI race against China to avoid a future dictated by totalitarian values. This "build it first or lose forever" mentality mirrors the flawed logic of the Manhattan Project, which ultimately produced a weapon even after its initial justification, a German nuclear threat, had vanished.

This historical precedent raises critical questions. Are we, like our Cold War predecessors, trapped in a narrative that blinds us to alternative paths? Are we, driven by fear and ambition, sleepwalking towards a potential catastrophe?

The Nuclear Narrative's Evolution: From Cooperation to Brinkmanship

The post-World War II era initially witnessed a flicker of hope for international cooperation on nuclear control. However, this nascent narrative was swiftly eclipsed by one of national security and nuclear supremacy, fueled by escalating Cold War tensions and a media landscape that amplified fear and championed military might.

From the ashes of Hiroshima and Nagasaki, a global nuclear arms race ignited. The drive for "bigger and better" bombs propelled the world towards the brink of annihilation during the Cuban Missile Crisis, a chilling reminder of the narrative's perilous power.

Echoes of the Past: AI and the New Technocratic Elite

Today, a similar narrative is unfolding in the realm of AI. A small group of influential figures, the leaders of major AI labs, shapes the discourse, often emphasizing the strategic importance of AI dominance. This mirrors the role played by figures like Edward Teller and Curtis LeMay, who championed aggressive nuclear development with political and media backing during the Cold War.

Yet, dissenting voices also emerge, reminiscent of the scientists who cautioned against the unchecked pursuit of nuclear weapons. Figures like Geoffrey Hinton and Yoshua Bengio, pioneers of AI research, now warn of the existential risks posed by advanced AI systems. Will their concerns, like those of their predecessors, be marginalized by the dominant narrative of technological inevitability?

The Media's Role: Shaping and Reflecting Public Opinion

The media plays a crucial role in shaping and reinforcing these narratives. Analysis of historical newspaper archives reveals a dramatic shift in the media's stance on nuclear weapons, from initial support for international control to enthusiastic advocacy for nuclear supremacy. A similar pattern seems to be emerging in the coverage of AI, raising concerns about the media's potential to amplify narratives of technological determinism and downplay calls for caution and international cooperation.

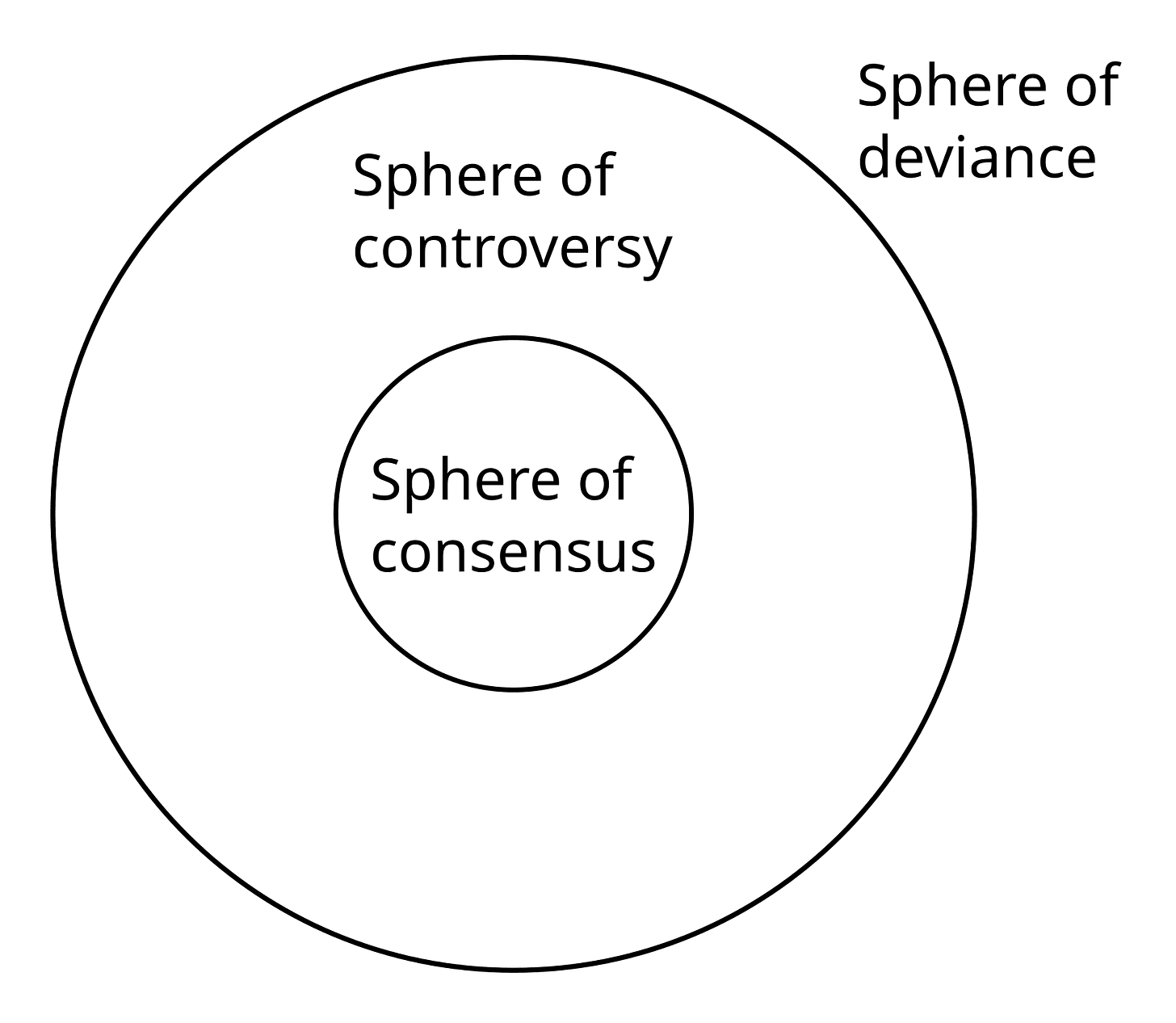

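Daniel C. Hallin’s theory of spheres of political discourse offers a valuable framework for understanding these dynamics. Hallin divides discourse into a sphere of consensus, a sphere of legitimate controversy, and a sphere of deviance, where views are treated as unworthy of serious coverage. In nuclear discourse, support for international control drifted from consensus toward deviance as advocacy for nuclear supremacy took its place. A comparable shift would pose a critical challenge for AI governance, so we must ensure that safety concerns are not sidelined in the same way.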

Learning from History: Charting a Different Course for AI

The historical parallels between the nuclear age and the rise of AI offer invaluable lessons. We must learn from the mistakes of the past and resist the temptation to repeat them. This requires a concerted effort to challenge dominant narratives, amplify dissenting voices, and prioritize international cooperation over a potentially disastrous race for technological dominance.

Unlike our predecessors in the nuclear age, we have the benefit of hindsight. We must leverage this advantage, along with the current media receptiveness to safety concerns, to forge a different path, ensuring that technology serves humanity, not the other way around.